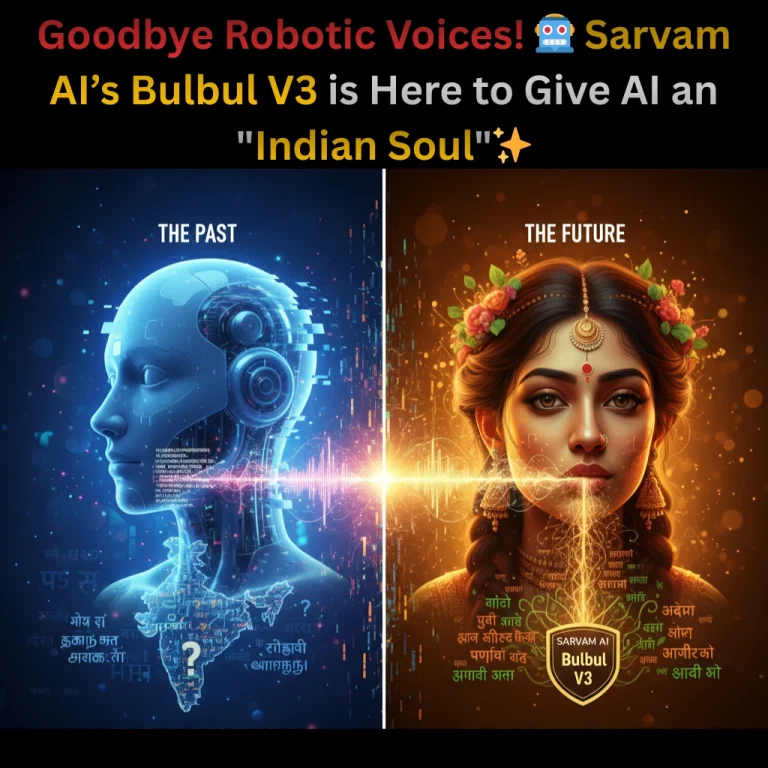

Can a machine truly understand the “Indian-ness” of a conversation? If you’ve ever winced at a robotic voice trying to pronounce a local street name or butchering the rhythm of a regional dialect, you know the struggle. But the gap between silicon and soul just got significantly smaller.

Sarvam AI, the homegrown startup quickly becoming the poster child for “Sovereign AI,” has just pulled the curtain back on Bulbul V3. This isn’t just another incremental update; it’s a sophisticated text-to-speech (TTS) engine designed specifically for the linguistic tapestry of India.

The Death of the Robot Voice

Why does most AI sound so… artificial? Usually, it’s because models are trained on English datasets and then “retrofitted” for Indian languages. They miss the emotional cadence, the subtle rise and fall of pitch, and the crucial human-like pauses that signal empathy or excitement.

Bulbul V3 changes the narrative. By focusing on 11 major Indian languages, Sarvam AI has built a model that understands tone modulation. Whether it’s the lyrical flow of Bengali or the rhythmic punch of Punjabi, the audio produced is professional-grade and remarkably natural.

What makes Bulbul V3 stand out?

- Human-like Pauses: It doesn’t rush through sentences. It breathes, pauses, and emphasizes words just like a real person would.

- Real-time Latency: The model is optimized for speed, meaning it can generate audio almost instantly, a game-changer for customer service bots and interactive apps.

- Cross-Linguistic Mastery: It handles code-switching (mixing English with Hindi or other regional tongues) without losing its “accent.”
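To make the code-switching point concrete, here is a minimal Python sketch of how mixed-script text could be split into per-script runs before it reaches a speech engine. This is not Sarvam AI's method or API. The function names (`script_of`, `word_script`, `split_by_script`) are hypothetical, and the approach only looks at Unicode character names, so it assumes the Hindi words are written in Devanagari. Romanized Hinglish ("mera order kab deliver hoga") would look like plain Latin text to it.

```python
import unicodedata


def script_of(ch: str) -> str:
    """Return a coarse script label for a single character."""
    if not ch.isalpha():
        # Digits, punctuation and combining vowel signs carry no label here.
        return "OTHER"
    name = unicodedata.name(ch, "")
    if name.startswith("DEVANAGARI"):
        return "DEVANAGARI"
    if name.startswith("LATIN"):
        return "LATIN"
    return "OTHER"


def word_script(word: str) -> str:
    """Label a whole word by the scripts of its letters."""
    labels = {script_of(c) for c in word} - {"OTHER"}
    if not labels:
        return "OTHER"
    if len(labels) == 1:
        return next(iter(labels))
    return "MIXED"


def split_by_script(text: str) -> list[tuple[str, str]]:
    """Group consecutive words that share a script into (label, text) runs."""
    segments: list[tuple[str, str]] = []
    for word in text.split():
        label = word_script(word)
        if segments and segments[-1][0] == label:
            # Same script as the previous word: extend the current run.
            segments[-1] = (label, segments[-1][1] + " " + word)
        else:
            segments.append((label, word))
    return segments


if __name__ == "__main__":
    for label, chunk in split_by_script("मेरा order कब deliver होगा"):
        print(label, chunk)
```

A sentence like the one in the example alternates between Devanagari and Latin runs word by word, which is exactly the pattern a TTS model has to voice without switching "accent" mid-sentence.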

Beyond Translation: Why Language Sovereignty Matters

Is it enough to just translate English AI into Hindi? Not quite. Language is culture, and for AI to be truly useful for the next billion users, it needs to be culturally grounded.

Sarvam AI’s approach with Bulbul V3 aligns with a larger global trend: the rise of specialized, localized models over “one-size-fits-all” giants. By training on high-quality, diverse Indian datasets, Bulbul V3 avoids the “translation lag” that plagues Western models.

This is particularly vital for sectors like EdTech, Rural Banking, and Healthcare. Imagine a farmer in Maharashtra receiving real-time crop advice through a voice that sounds like a trusted neighbor rather than a cold, distant computer. That level of trust is what Sarvam AI is aiming for.

Scaling the “Voice-First” Economy

India has always been a voice-first market. From WhatsApp voice notes to searching on Google via the mic icon, Indian users prefer talking over typing. Bulbul V3 is the engine that could power this entire ecosystem.

Key Use Cases for the New Model:

- Hyper-Personalized Content: Media houses can now automate news bulletins in multiple languages without losing the “journalist’s tone.”

- Accessible Education: Complex textbooks can be converted into engaging audiobooks that sound like a teacher is reading them.

- Seamless Customer Support: Brands can deploy voice bots that don’t frustrate customers, leading to better retention and faster resolution.

Final Thoughts: The Future is Multilingual

We are moving away from the era of “General AI” and into the era of “Contextual AI.” Sarvam AI’s Bulbul V3 isn’t just a technical achievement; it’s a bridge. It connects the high-tech world of LLMs with the lived reality of millions of Indians who communicate in their mother tongue.

Will this finally end the “Uncanny Valley” of AI voices in India? It certainly feels like it. As Sarvam AI continues to refine its stack, from its language models to Bulbul, the message is clear: the future of the Indian internet won’t just be seen; it will be heard, and it will sound remarkably human.

What do you think: would you be able to tell the difference between Bulbul V3 and a real human narrator? The line is getting thinner by the day.

FAQs


Can Bulbul V3 actually handle "Hinglish"?

Yes! Unlike older models that struggle with mixed languages, Bulbul V3 is designed for the way Indians actually speak, seamlessly blending English terms with regional dialects without losing its natural cadence.

Is the audio generated in real-time?

Absolutely. One of the standout features of the V3 update is its low latency, making it fast enough for live customer support and interactive voice bots.
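If you want to check a "real-time" claim for yourself, the number that matters for a voice bot is usually the time until the first audio chunk arrives, not the time to finish the whole clip. The sketch below shows one way to measure that in Python for any streaming TTS client. It does not call Sarvam AI's API: `measure_streaming_latency` is a hypothetical helper, and `fake_tts` is a stand-in generator that only simulates a streaming engine, so you would swap in your own client function.

```python
import time
from typing import Callable, Iterable, Iterator, Optional


def measure_streaming_latency(
    synthesize: Callable[[str], Iterable[bytes]], text: str
) -> dict:
    """Time a streaming synthesis call and report basic latency stats."""
    start = time.perf_counter()
    first_chunk_at: Optional[float] = None
    chunks = 0
    total_bytes = 0
    for chunk in synthesize(text):
        if first_chunk_at is None:
            # The moment a listener could start hearing audio.
            first_chunk_at = time.perf_counter()
        chunks += 1
        total_bytes += len(chunk)
    end = time.perf_counter()
    return {
        "time_to_first_chunk_s": (
            None if first_chunk_at is None else first_chunk_at - start
        ),
        "total_time_s": end - start,
        "chunks": chunks,
        "total_bytes": total_bytes,
    }


def fake_tts(text: str) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming TTS client (assumption)."""
    for word in text.split():
        time.sleep(0.01)  # pretend the engine needs 10 ms per chunk
        yield word.encode("utf-8")


if __name__ == "__main__":
    stats = measure_streaming_latency(fake_tts, "namaste duniya")
    print(stats)
```

For a live conversation, a low time to first chunk matters more than total time, because playback can begin while the rest of the audio is still being generated.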

Which 11 languages are supported?

The 11 supported languages include Hindi, Bengali, Punjabi, Gujarati, Marathi, Telugu, Tamil, Kannada, and Malayalam, among others, ensuring wide coverage across the subcontinent.

How does "tone modulation" work in this model?

Instead of a flat delivery, Bulbul V3 analyzes the context of the text to add emphasis, emotional weight, and human-like pauses, making it sound more like a storyteller and less like a machine.