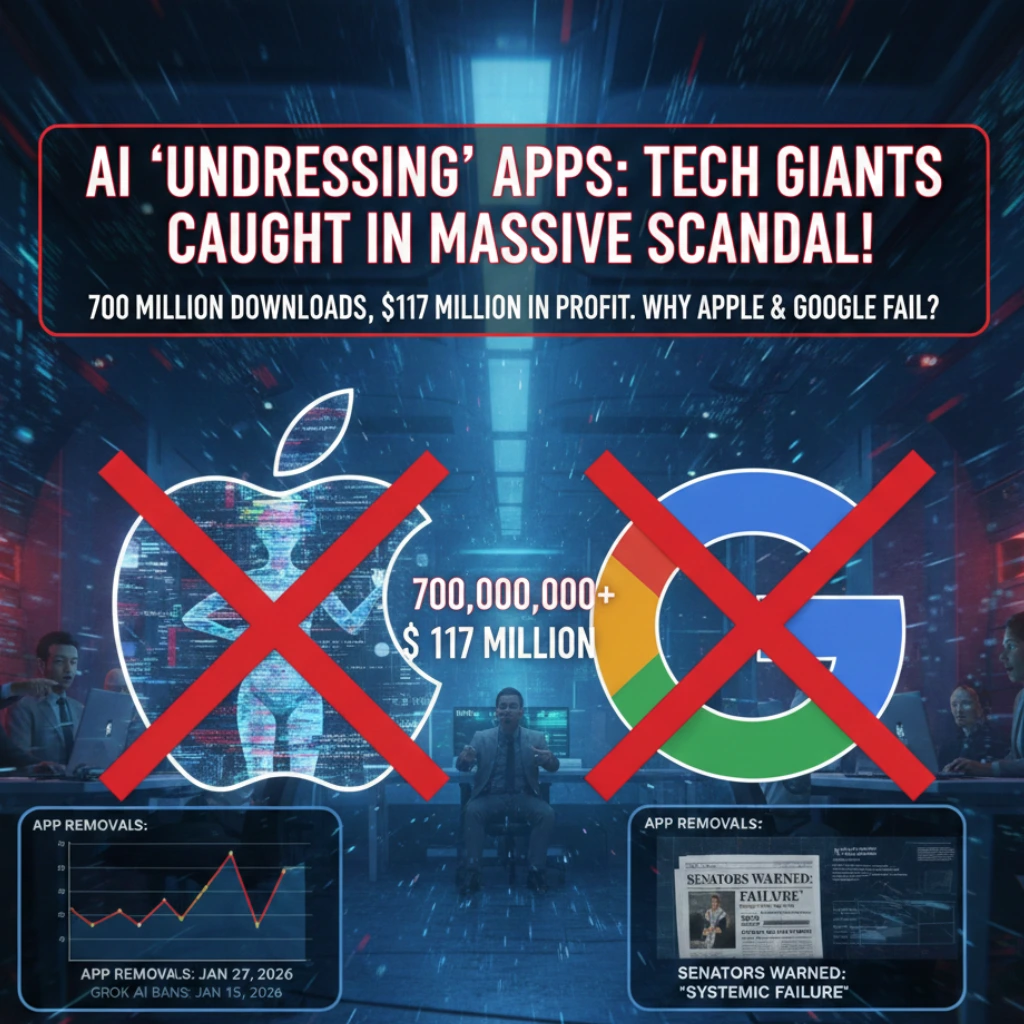

The tech industry is reeling today following a bombshell report that has ignited a massive AI Nudify App controversy. Despite long-standing public commitments to user safety, tech giants Apple and Google have been found hosting over 100 applications designed to “digitally undress” individuals without their consent.

Scale of the Scandal: 700 Million Downloads

According to a detailed investigation by the Tech Transparency Project (TTP), these “nudify” tools have seen explosive growth. The report identifies at least 102 apps that have collectively surpassed 700 million downloads. Even more alarming is the financial aspect: these apps have generated approximately $117 million in gross revenue, with both Apple and Google profiting through their standard platform commission fees.

Policy Gaps and Selective Enforcement

Both Google and Apple say they don’t allow sexually explicit apps. The TTP investigation tells a different story: the companies’ automated filters are failing to stop content that violates those rules. Google Play, for example, explicitly bans any app that claims it can “undress” people in photos. Even so, 55 of these apps were still available on its store and remained active until today.

Key Takeaways

- The Rules: Companies claim to ban sexual content.

- The Problem: Automated software is missing many violations.

- The Evidence: 55 “undressing” apps were found on Google Play.

- The Action: These apps were only addressed after the report came out.

In response to the backlash, Apple has reportedly removed 28 apps that violated its terms. However, critics argue that the action is “too little, too late.” The controversy follows a recent warning from U.S. Senators about the “mass generation of nonconsensual sexualized images” and the systemic failure of app store gatekeepers.

The Broader AI Landscape

The surge in these apps is part of a wider trend that recently impacted other major platforms. For context, Elon Musk’s Grok AI recently faced similar scrutiny, leading to bans in various international jurisdictions before strict image filters were implemented on January 15, 2026. The fallout from the Grok situation appears to have catalyzed the current investigation into mobile app stores.

FAQs

What exactly is the AI Nudify App controversy?

It refers to the discovery of over 100 mobile apps on the Apple and Google stores that use artificial intelligence to create non-consensual deepfake nude images.

Have these apps been removed from the App Store and Google Play?

Following the TTP report on January 27, 2026, both companies began a massive cleanup, removing dozens of apps, though experts warn that “clones” often reappear under different names.

Is it illegal to use these AI nudify apps?

While laws vary by region, many jurisdictions are fast-tracking legislation to criminalize the creation and distribution of non-consensual deepfake pornography.